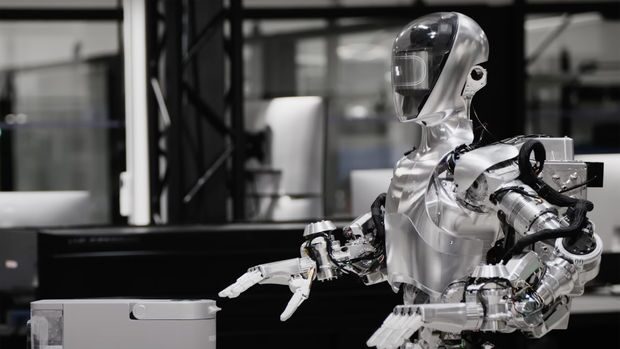

1X, a humanoid robot company backed by OpenAI, has released a video showcasing its robot Eve’s ability to perform sequential tasks autonomously. This advancement brings humanoid robots closer to full autonomy, paralleling the rapid developments seen in AI since the launch of ChatGPT.

Sequential Task Mastery

1X aims to provide safe, intelligent androids to fulfill physical labor roles. The latest video from the company demonstrates Eve’s capability to autonomously complete a series of tasks. Despite these advancements, 1X acknowledges that this is just the beginning of their journey.

Previously, 1X developed an autonomous model that integrated multiple tasks into a single goal-conditioned neural network. However, when these multi-task models were small (fewer than 100 million parameters), adding data to correct the behavior of one task often degraded the performance of others. Increasing the parameter count can ease this interference, but larger models take longer to train, which slowed the team's ability to work out what was needed to improve robot behavior.

Innovative Solution for Rapid Iteration

1X tackled this challenge by decoupling two goals: rapidly improving the performance of individual tasks, and merging multiple abilities into a single neural network. To do so, they developed a voice-controlled natural language interface that chains short-horizon skills, each handled by one of several small models, into longer behaviors.

In the video, humans direct the robot by voice, showcasing its ability to perform long-horizon tasks through skill chaining. Humans perform such tasks easily, but chaining multiple autonomous robot skills in sequence is hard: each subsequent skill must generalize to whatever state the previous one left behind, so the variation compounds with each step.
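The skill chaining described above can be illustrated with a minimal sketch. Everything in it is a hypothetical stand-in rather than 1X's actual system: the `Skill` class, the `SKILLS` registry, the two toy policies, and the dictionary used as scene state are all assumptions made for illustration. Plain functions take the place of the small single-task models, and the commands are assumed to arrive already transcribed from speech.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical scene state: a dict of boolean facts, standing in for real sensor data.
State = Dict[str, bool]


@dataclass
class Skill:
    """One short ability; `policy` stands in for a small single-task model."""
    name: str
    policy: Callable[[State], State]


def pick_up_cup(state: State) -> State:
    return {**state, "holding_cup": True}


def place_cup_on_shelf(state: State) -> State:
    # This skill only works from the end state the previous skill is expected to leave.
    if not state.get("holding_cup", False):
        raise RuntimeError("cannot place the cup: robot is not holding it")
    return {**state, "holding_cup": False, "cup_on_shelf": True}


# Registry mapping a transcribed command to the skill that handles it.
SKILLS: Dict[str, Skill] = {
    "pick up the cup": Skill("pick_up_cup", pick_up_cup),
    "place the cup on the shelf": Skill("place_cup_on_shelf", place_cup_on_shelf),
}


def run_chain(commands: List[str], state: State) -> State:
    """Run each commanded skill in order, feeding it the state left by the previous one."""
    for command in commands:
        skill = SKILLS[command.strip().lower()]
        state = skill.policy(state)
    return state


final_state = run_chain(["Pick up the cup", "Place the cup on the shelf"], {})
print(final_state)
```

The failure branch in `place_cup_on_shelf` is the toy version of the difficulty the article describes: a skill run from a starting state it was not prepared for breaks the chain, which is why each skill has to tolerate the range of states its predecessor can produce.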

Creating a New Data Pool with Human Guidance

For robots to match the ease with which humans carry out long tasks, they must cope with the variation that accumulates across sequential skills. From the user’s perspective, the robot can perform many natural language tasks, and the interface abstracts away how many models actually control it. This approach allows single-task models to be merged into goal-conditioned models over time.

Single-task models provide a robust foundation for shadow mode evaluations, enabling the team to compare predictions of a new model with the existing reference during testing. When the goal-conditioned model aligns well with single-task model predictions, 1X can transition to a more powerful, unified model without changing the user workflow.
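As a rough illustration of a shadow mode evaluation, the sketch below runs a candidate model on the same observations as the reference model and measures how often their predicted actions agree. The function name `shadow_eval`, the `tolerance` threshold, the agreement metric, and the toy lambda "models" are assumptions chosen for the example; the article does not say how 1X scores agreement.

```python
from typing import Callable, List, Sequence

Observation = List[float]
Action = List[float]
Model = Callable[[Observation], Action]


def shadow_eval(
    reference: Model,
    candidate: Model,
    observations: Sequence[Observation],
    tolerance: float = 0.05,
) -> float:
    """Return the fraction of observations where the candidate's action stays
    within `tolerance` of the reference's action on every dimension."""
    if not observations:
        raise ValueError("need at least one observation")
    agreements = 0
    for obs in observations:
        ref_action = reference(obs)    # the action that would actually be executed
        cand_action = candidate(obs)   # computed for comparison only, never executed
        worst_gap = max(abs(r - c) for r, c in zip(ref_action, cand_action))
        if worst_gap <= tolerance:
            agreements += 1
    return agreements / len(observations)


# Toy stand-ins for a single-task reference model and two candidate models.
reference_model: Model = lambda obs: [2.0 * x for x in obs]
close_candidate: Model = lambda obs: [2.0 * x + 0.01 for x in obs]
far_candidate: Model = lambda obs: [2.0 * x + 0.1 for x in obs]

logged_observations = [[0.1, 0.2], [0.3, 0.4]]
close_score = shadow_eval(reference_model, close_candidate, logged_observations)
far_score = shadow_eval(reference_model, far_candidate, logged_observations)
print(close_score, far_score)
```

In this toy setup a high agreement score would be the signal to consider swapping in the unified model; where to set that bar is a judgment call the sketch does not try to settle.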

Using a high-level language interface to direct robots opens a new avenue for data collection. Instead of using VR to control a single robot, an operator can direct multiple robots using natural language. Since these directions are sent sporadically, humans do not need to be physically present with the robots and can control them remotely.

Transitioning to Full Autonomy

1X acknowledges that the robots in the video change tasks based on human direction, meaning they are not yet fully autonomous. The next step involves automating the predictions of high-level actions after creating a dataset of vision and natural language command pairs. 1X envisions this being possible with multi-modal, vision-capable language models such as GPT-4, VILA, and Gemini Vision.
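A dataset of vision and natural language command pairs could take many forms. The sketch below shows one simple possibility, with each record pairing a camera frame with the command an operator issued at that moment, stored as JSON Lines. The `CommandSample` fields, the file paths, and the storage format are all hypothetical; the article does not describe 1X's data schema.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class CommandSample:
    """One training pair: what the robot saw and what the operator asked for."""
    image_path: str     # hypothetical path to the camera frame at command time
    command: str        # the operator's natural language instruction
    timestamp_s: float  # seconds since the start of the session


def to_jsonl(samples: List[CommandSample]) -> str:
    """Serialize samples as one JSON object per line."""
    return "\n".join(json.dumps(asdict(sample)) for sample in samples)


def from_jsonl(text: str) -> List[CommandSample]:
    """Parse the JSON Lines text back into samples, skipping blank lines."""
    return [CommandSample(**json.loads(line)) for line in text.splitlines() if line.strip()]


samples = [
    CommandSample("frames/000123.png", "pick up the cup", 12.5),
    CommandSample("frames/000456.png", "place the cup on the shelf", 47.0),
]
serialized = to_jsonl(samples)
restored = from_jsonl(serialized)
print(serialized)
```

Pairs like these are the kind of input a vision-capable language model would need in order to learn which high-level command to issue for a given scene.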

Conclusion

1X’s advancements in humanoid robotics represent a significant step towards achieving full autonomy. By using a natural language interface to chain tasks, they address the complexity of sequential task execution and pave the way for future developments in humanoid robots. As they continue to innovate, the potential for humanoid robots to take on increasingly complex and autonomous roles grows, promising exciting possibilities for the future.