Microsoft has announced plans to integrate AMD’s AI chips into its platforms to provide cloud customers with more flexibility and offer an alternative to Nvidia. This move aims to diversify the hardware options available in Microsoft’s cloud services and reduce dependency on a single chip provider.

Expanding Cloud Services with AMD AI Chips

Microsoft said it will offer its cloud computing customers a platform built on AMD’s AI chips. These chips will compete directly with components currently supplied by Nvidia. Microsoft is expected to share more details about the integration at its Build developer conference next week, where it also plans to showcase its own Cobalt 100 processors.

Combining AMD and In-House Chips

Microsoft will make clusters of AMD’s flagship MI300X AI chips available to customers through its Azure cloud computing service. These chips offer an alternative to Nvidia’s H100 chips, which dominate the data center AI chip market but are often difficult to obtain because of high demand. AMD is targeting $4 billion in revenue from its AI chips this year, and Microsoft’s offering could help it reach that goal. Nvidia currently controls approximately 80% of the AI chip market for servers.

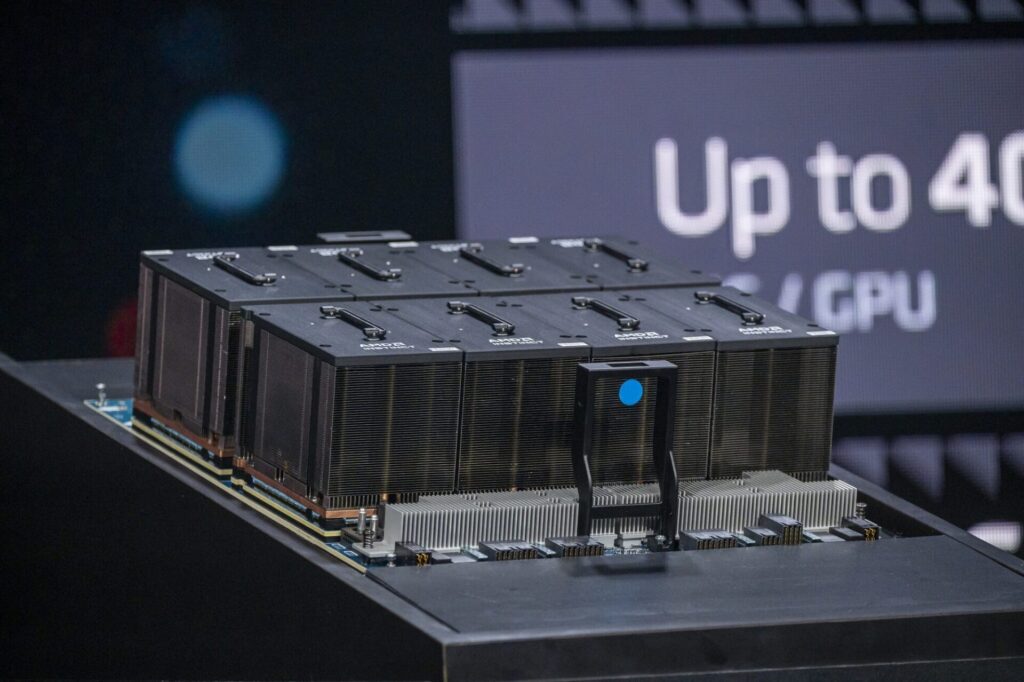

In addition to Nvidia’s chips, Microsoft also sells access to its own AI chips, named Maia. Separately, the company plans to deploy its new Cobalt 100 processors, which are set for a preview next week. According to Microsoft, the Cobalt 100 processors offer 40% better performance than other Arm-based chips on the market. Early adopters include Adobe, Snowflake, and others. Announced in November, the Cobalt chips are being tested to power Teams, Microsoft’s business messaging tool, and are positioned to compete with Amazon’s in-house Graviton CPUs.
