The development of Emo: A milestone in robotics

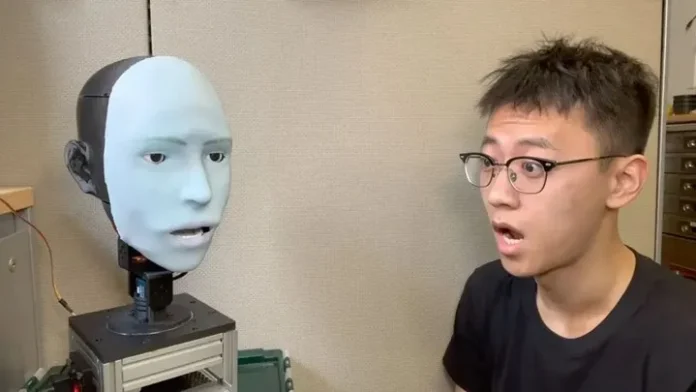

The development of Emo, the robot face that can predict and imitate human facial expressions, marks a major milestone in the field of robotics. This breakthrough technology has the potential to revolutionize the way humans and robots interact, creating a more seamless and natural experience.

Advanced technology behind Emo’s facial expressions

Emo’s ability to detect and predict human facial expressions comes from machine learning algorithms combined with facial analysis technology. By analyzing thousands of data points on the human face, Emo can anticipate a smile before it occurs, with an accuracy rate of over 90%. This predictive capability lets Emo imitate human expressions in real time and respond more effectively to human emotions and intentions.
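Emo’s own predictor is a trained neural network, and its internals are not described here. As a much simpler, hypothetical illustration of what "anticipating a smile" means, the sketch below tracks one landmark-derived feature (the distance between the mouth corners, normalized by face width, an assumption chosen for clarity) over recent frames, fits a straight line to it, and estimates how many frames remain before it crosses a smile threshold. The feature, the threshold, and all function names are invented for this example.

```python
from __future__ import annotations

from typing import Sequence

# Hypothetical illustration only: Emo uses a trained neural network, not this
# linear extrapolation. Feature, threshold, and names are invented for clarity.


def mouth_width_ratio(left_corner: tuple[float, float],
                      right_corner: tuple[float, float],
                      face_width: float) -> float:
    """Distance between the mouth corners, normalized by face width."""
    dx = right_corner[0] - left_corner[0]
    dy = right_corner[1] - left_corner[1]
    return (dx * dx + dy * dy) ** 0.5 / face_width


def linear_fit(values: Sequence[float]) -> tuple[float, float]:
    """Least-squares slope and intercept of values against frame index 0..n-1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two frames")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    var = sum((i - mean_x) ** 2 for i in range(n))
    slope = cov / var
    return slope, mean_y - slope * mean_x


def frames_until_smile(history: Sequence[float], threshold: float) -> float | None:
    """Estimate frames remaining until the feature crosses `threshold`.

    Returns 0.0 if the latest frame is already at or above the threshold,
    and None if the fitted trend is flat or falling (no smile expected).
    """
    if history[-1] >= threshold:
        return 0.0
    slope, intercept = linear_fit(history)
    if slope <= 0:
        return None
    crossing_index = (threshold - intercept) / slope
    return max(crossing_index - (len(history) - 1), 0.0)


if __name__ == "__main__":
    ratios = [0.40, 0.42, 0.44, 0.46]  # made-up per-frame feature values
    print(frames_until_smile(ratios, threshold=0.50))
```

With the made-up values above, the trend crosses the threshold about two frames after the latest one. A straight-line trend is easy to reason about but reacts poorly to noise; a learned model can capture patterns that a single line cannot.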

Creating a sense of connection and empathy

Imagine Emo interacting with a group of people. As it scans the faces in the room, it can detect subtle changes in facial expressions and predict the emotions behind them. Responding to those cues in sync with the people around it creates a sense of connection and empathy, making the interaction feel more natural and human-like.

Expanding possibilities for communication and collaboration

Emo’s ability to mimic human facial expressions also opens up new possibilities for communication and collaboration between humans and robots. In fields such as healthcare and therapy, where empathy and emotional connection are crucial, Emo could play a vital role, providing emotional support to patients by imitating their expressions to convey understanding and comfort. Similarly, in customer service settings, Emo could enhance the customer experience by mirroring customers’ emotions and building rapport.

The future of emotionally intelligent robots

The development of Emo is just the beginning of a new era in robotics. As researchers continue to refine and improve this technology, we can expect to see even more advanced robot faces that not only predict and imitate facial expressions but also understand and respond to complex human emotions. This will pave the way for robots that can truly integrate into our daily lives, assisting us in various tasks and becoming trusted companions.

The research behind Emo’s development

The team at Columbia University embarked on a groundbreaking research project aimed at revolutionizing non-verbal communication in robotics. They recognized the importance of non-verbal cues in human interactions and understood that replicating these cues in robotic faces would be crucial for creating more realistic and engaging human-robot interactions.

Creating dynamic and authentic facial movements

To achieve this, the researchers focused on developing a robot face that could generate dynamic and authentic facial movements. They understood that human facial expressions are not static but constantly changing, influenced by various factors such as emotions, social context, and personal experiences. Therefore, they aimed to create a robot face that could mimic these natural variations in facial expressions.

Analyzing and interpreting human facial expressions in real time

Using computer vision and machine learning, the team developed a system that analyzes and interprets human facial expressions in real time. This system allows the robot face to respond to the emotional cues of its human counterpart, adapting its own facial expressions accordingly.

Incorporating gestures and body language

The researchers also recognized that non-verbal communication extends beyond facial expressions: gestures, body language, and even eye movements play a significant role in human interactions. They therefore expanded their research to include these aspects of non-verbal communication as well.

A holistic approach to non-verbal communication

By incorporating sensors and actuators throughout the robot’s body, the team enabled it not only to generate dynamic facial expressions but also to mimic human gestures and body language. This holistic approach aims to make human-robot interactions more immersive and natural.

Applications in various industries

The implications of these advancements in non-verbal communication are vast. Robots equipped with the ability to accurately interpret and respond to human non-verbal cues can be deployed in various settings, such as healthcare, education, and customer service. They can provide emotional support to patients, assist in teaching complex subjects, and enhance customer experiences.

Bridging the gap between humans and robots

Moreover, these advancements also have the potential to bridge the gap between humans and robots, fostering a sense of trust and empathy. When robots can understand and respond to human non-verbal cues, they become more relatable and approachable, breaking down barriers that may exist between humans and machines.

Challenges in developing a robotic face

Despite advances in hardware and software, developing a robotic face still poses several challenges. One major hurdle is realistic facial animatronics: lifelike facial movement depends on intricate mechanical designs, careful engineering, and precise control of the actuators.
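Precise actuator control usually means, at minimum, keeping every command inside the mechanism’s safe range and limiting how fast it moves so the face does not jerk. The sketch below shows that idea for a single actuator. The normalized units, the limits, and the names `ServoLimits`, `next_command`, and `trajectory` are hypothetical and are not taken from Emo’s controller.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ServoLimits:
    """Hypothetical per-actuator limits in normalized units (not Emo's specs)."""
    min_pos: float
    max_pos: float
    max_step: float  # largest position change allowed in one control tick


def next_command(current: float, target: float, limits: ServoLimits) -> float:
    """Move one tick toward `target`, respecting the range and the step limit."""
    # Clamp the target into the actuator's mechanical range.
    target = min(max(target, limits.min_pos), limits.max_pos)
    # Limit how far the actuator may move in a single tick.
    step = min(max(target - current, -limits.max_step), limits.max_step)
    return current + step


def trajectory(start: float, target: float, limits: ServoLimits, ticks: int) -> List[float]:
    """Positions commanded over `ticks` control cycles."""
    positions = []
    position = start
    for _ in range(ticks):
        position = next_command(position, target, limits)
        positions.append(position)
    return positions


if __name__ == "__main__":
    limits = ServoLimits(min_pos=0.0, max_pos=1.0, max_step=0.25)
    # An out-of-range target is clamped, then approached in bounded steps.
    print(trajectory(0.0, 2.0, limits, ticks=5))
```

A real face would run logic like this for every actuator on each control tick; a smaller step limit gives smoother motion at the cost of slower responses.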

Fine-tuning facial expression engines

On the software side, the facial expression engines must run correctly and in real time for Emo to express emotions effectively. The research team used two neural networks: one to predict Emo’s own facial expressions and one to predict those of the person it is talking to.
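The text describes one model for Emo’s own expressions and one for the other person’s, without giving their architecture. The sketch below replaces both with trivial stand-ins purely to show how they could fit together: a predictor that guesses the person’s upcoming facial landmarks, and a self-model that turns target landmarks into motor commands. Using the self-model in that inverse direction (landmarks to motor commands) is an assumption of this sketch, as are the linear extrapolation, the weight matrix, and every function name.

```python
from typing import List

Vector = List[float]


def predict_landmarks(history: List[Vector], horizon: int) -> Vector:
    """Stand-in for the prediction network (hypothetical): linearly
    extrapolate each landmark coordinate `horizon` frames ahead."""
    previous, latest = history[-2], history[-1]
    return [x + horizon * (x - p) for p, x in zip(previous, latest)]


def self_model(landmarks: Vector, weights: List[Vector]) -> Vector:
    """Stand-in for the self-model (hypothetical): a linear map from target
    landmarks to motor commands, clipped to the normalized range [0, 1]."""
    commands = []
    for row in weights:
        value = sum(w * x for w, x in zip(row, landmarks))
        commands.append(min(max(value, 0.0), 1.0))
    return commands


def coexpression_step(history: List[Vector], weights: List[Vector], horizon: int) -> Vector:
    """Predict where the human's face is heading, then compute the motor
    commands that would make the robot's face match it."""
    target = predict_landmarks(history, horizon)
    return self_model(target, weights)


if __name__ == "__main__":
    frames = [[0.0, 0.0], [0.1, 0.2]]  # made-up landmark coordinates
    w = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]  # made-up weights for 3 motors
    print(coexpression_step(frames, w, horizon=2))
```

Keeping the two stages separate mirrors the split described in the text: the predictor only needs to understand human faces, while the self-model only needs to understand the robot’s own hardware.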

Integrating the robotic face with the robot’s body

Another challenge lies in the integration of the robotic face with the rest of the robot’s body. While the face is undoubtedly the most expressive part of a robot, it must seamlessly blend with the overall design and functionality of the robot.

Addressing power consumption

Furthermore, the team has had to address the issue of power consumption. The facial expression engines and actuators require a significant amount of power to operate, and finding an efficient and sustainable power source has been a challenge.
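A rough power budget makes the problem concrete. The sketch below estimates average draw from the number of actuators, supply voltage, idle and active current, and the fraction of time each actuator is moving, then converts that into battery runtime. Every number in the example is hypothetical and chosen only to show the arithmetic; none of it describes Emo’s hardware.

```python
def actuator_power_w(count: int, voltage_v: float, idle_a: float,
                     active_a: float, duty_cycle: float) -> float:
    """Average electrical power of `count` identical actuators.

    duty_cycle is the fraction of time an actuator draws its active current.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    average_current_a = idle_a + duty_cycle * (active_a - idle_a)
    return count * voltage_v * average_current_a


def runtime_hours(battery_wh: float, actuator_w: float, compute_w: float) -> float:
    """Hours a battery lasts at a constant average load (ignores losses)."""
    return battery_wh / (actuator_w + compute_w)


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    load = actuator_power_w(count=10, voltage_v=5.0, idle_a=0.1,
                            active_a=1.1, duty_cycle=0.2)
    print(load, runtime_hours(battery_wh=60.0, actuator_w=load, compute_w=5.0))
```

With these made-up figures the actuators average 15 W (10 × 5 V × 0.3 A); adding 5 W for computing gives 20 W, so a 60 Wh battery would last about three hours. Raising the duty cycle, as continuous expressive motion would, shortens that quickly.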

Bringing it all together

Developing a robotic face is a complex, multifaceted endeavor that combines mechanical engineering, software development, and a deep understanding of human emotions. Despite the challenges, the research team has made significant strides with Emo, a robot with an impressive range of facial expressions and the ability to predict and mirror the expressions of its human counterpart.

Real-time prediction and synchronization

The first neural network is trained on Emo’s own mirrored facial expressions: by relating the motor commands sent to its hardware to the expressions they produce, the robot learns to predict its own facial expressions. The second network is trained to predict the facial expressions of the interlocutor during a conversation. Together, they allow Emo to predict a human smile approximately 839 milliseconds before it happens and to synchronize its own smile with the person’s in real time.
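The 839-millisecond lead matters because motors cannot move instantly. The sketch below shows the timing arithmetic: given when a smile is predicted to begin and how long the robot’s face takes to form a smile, it computes when the robot should start moving so both smiles appear together. The 30 fps camera rate, the 300 ms actuation time, and the function names are hypothetical, not measurements or code from Emo.

```python
from typing import Tuple

PREDICTION_LEAD_MS = 839.0  # lead time stated in the article


def lead_in_frames(lead_ms: float, fps: float) -> float:
    """How many camera frames the prediction lead spans at a given frame rate."""
    return lead_ms * fps / 1000.0


def schedule_smile(now_ms: float, predicted_onset_ms: float,
                   actuation_ms: float) -> Tuple[float, bool]:
    """Return (start_time_ms, is_late).

    The robot should begin moving `actuation_ms` before the predicted onset
    so its smile appears at the same moment as the human's. If that moment
    has already passed, start immediately and flag the command as late.
    """
    start = predicted_onset_ms - actuation_ms
    if start < now_ms:
        return now_ms, True
    return start, False


if __name__ == "__main__":
    # Hypothetical: 30 fps camera, smile takes 300 ms to form on the robot.
    print(lead_in_frames(PREDICTION_LEAD_MS, fps=30))
    print(schedule_smile(now_ms=0.0, predicted_onset_ms=PREDICTION_LEAD_MS,
                         actuation_ms=300.0))
```

Under these assumptions the lead spans about 25 frames, and the robot can wait roughly 539 ms before it has to start moving. Shorter predictions or slower actuators shrink that margin, which is why predicting well ahead helps real-time synchronization.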

Implications for therapy and entertainment

One area where this technology could have a significant impact is in the field of therapy. Robots like Emo could be programmed to recognize and respond to human emotions, providing support and companionship to those in need. Furthermore, in the entertainment industry, Emo could be used to enhance virtual reality experiences by creating more realistic and immersive interactions.

Improving communication in customer service

Additionally, in customer service settings, Emo could be employed to improve communication and understanding between humans and machines. For example, in call centers, Emo could analyze the facial expressions of customers and provide real-time feedback to the customer service representative, helping them better understand the customer’s emotions and needs.

Continued advancements in human-robot interaction

Overall, the development of Emo and its ability to predict and synchronize facial expressions in real-time represents a significant advancement in human-robot interaction. As this technology continues to evolve, we can expect to see even more innovative applications that bridge the gap between humans and robots, making our interactions with machines more natural and intuitive than ever before.