OpenAI, the company behind ChatGPT and Sora, both of which made waves in the AI world, has officially announced GPT-4o, its new flagship artificial intelligence model, which can reason across text, audio, and images in real time. The journey that began with ChatGPT and DALL-E continued with Sora, and OpenAI keeps improving the models behind these tools. Below, we look at what GPT-4o is, what it can do, and more:

OpenAI GPT-4o: What is it, and what does it do?

- GPT-4-level intelligence

- Responses drawn from both the model and the web

- Data analysis and chart generation

- Chatting about photos you take

- Conversing through video

- Real-time translation

- Human-like voice, intonation, and facial expressions

- Uploading files for help with summarizing, writing, or analysis

- Access to the GPT Store and custom GPTs

- Deeper communication through Memory, which remembers previous conversations

According to OpenAI, GPT-4o is a significant step toward much more natural human-computer interaction: the model accepts any combination of text, audio, and images as input and can produce any combination of text, audio, and image outputs. The “o” in the name stands for “omni,” a nod to the model’s ability to handle text, speech, and video.

Enhanced Text, Audio, and Visual Reasoning

GPT-4o delivers “GPT-4-level” intelligence while extending GPT-4’s abilities across multiple modalities and settings. GPT-4 Turbo, for example, was trained on a combination of images and text and could handle tasks such as describing images in text and identifying what they contain. GPT-4o adds speech to the mix, which effectively turns ChatGPT into a digital voice assistant. “But how is that useful? Couldn’t you already talk to ChatGPT?” you might ask. Yes, ChatGPT has had a voice mode for quite some time, which uses a separate model to convert its text responses into speech. GPT-4o goes further and lets users interact with ChatGPT as they would with an assistant.

For example, suppose you ask ChatGPT a question and, once it starts responding, you want to interrupt to add a point or correct a misunderstanding. With the old system, you had to wait for ChatGPT to finish typing or speaking. With GPT-4o-powered ChatGPT, you can interrupt it mid-response, start a new exchange, and carry on seamlessly.

Human-Level Vocal Response

OpenAI claims the model can respond in real time and can even produce “a range of different emotional styles” (including singing) by picking up on nuances in the user’s voice. Technically, the model can respond to audio inputs in as little as 232 milliseconds. That figure may not mean much on its own, but it is comparable to human response time in a conversation.

Before GPT-4o, Voice Mode in ChatGPT had average latencies of about 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4). The old Voice Mode pipeline chained three separate models: a simple model transcribed speech to text, a second model took that text and produced a text reply, and a third simple model converted the reply back into speech. As a result, a great deal of information was lost along the way, and the pipeline could not capture or convey nuances such as tone of voice or emotional expression.
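The following toy Python sketch makes the information loss concrete by contrasting the two designs. Everything in it is hypothetical. `Utterance`, `speech_to_text`, `text_model`, `text_to_speech`, and `end_to_end_model` are illustrative stand-ins, not OpenAI code, and an audio clip is reduced to just its words and a single tone label. The sketch only shows the structural point: when the stages pass plain text to each other, the tone has nowhere to go.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    """Hypothetical audio clip, reduced to what was said and how it was said."""
    words: str
    tone: str  # stand-in for prosody and emotion, e.g. "whispering"


# Hypothetical stand-ins for the three separate models in the old Voice Mode.
def speech_to_text(audio: Utterance) -> str:
    # A transcript has no field for tone, so the tone is dropped here.
    return audio.words


def text_model(prompt: str) -> str:
    # The text-only model sees nothing but the transcript.
    return f"Reply to: {prompt}"


def text_to_speech(text: str) -> Utterance:
    # The voice model never saw the user's tone, so it falls back to a default.
    return Utterance(words=text, tone="neutral")


def cascaded_voice_mode(audio: Utterance) -> Utterance:
    """Old approach: three models chained together, passing plain text."""
    return text_to_speech(text_model(speech_to_text(audio)))


def end_to_end_model(audio: Utterance) -> Utterance:
    """Toy stand-in for a single network that takes and returns audio directly,
    so the input tone is still available when the reply is produced."""
    return Utterance(words=f"Reply to: {audio.words}", tone=audio.tone)


if __name__ == "__main__":
    user = Utterance(words="Tell me a story", tone="whispering")
    print(cascaded_voice_mode(user))  # tone comes back as "neutral"
    print(end_to_end_model(user))     # tone "whispering" is preserved
```

The end-to-end stand-in simply copies the input tone into its reply. That is a deliberate simplification: a real model learns how to respond to tone rather than mirroring it.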

One Model for Everything

With GPT-4o, a single model handles text, images, and audio end to end, meaning all inputs and outputs are processed by the same neural network. This is a first for the company, as its previous models could not combine all of these modalities in one network. Even so, OpenAI says it is still in the early stages of exploring what the model can do and where its limitations lie.

Image Analysis and Pocket Translator

GPT-4o also improves ChatGPT’s vision capabilities. Given a photo, or even a screenshot of a desktop, ChatGPT can now quickly give detailed answers to complex questions about it (e.g., “What brand is the shirt this person is wearing?”). OpenAI’s CTO, Mira Murati, says these features will continue to evolve.

Today, GPT-4o can translate a menu written in another language from a single photo almost instantly, effectively acting as a pocket translator. In the future, the model may let ChatGPT watch a live sports match and explain the rules to you.

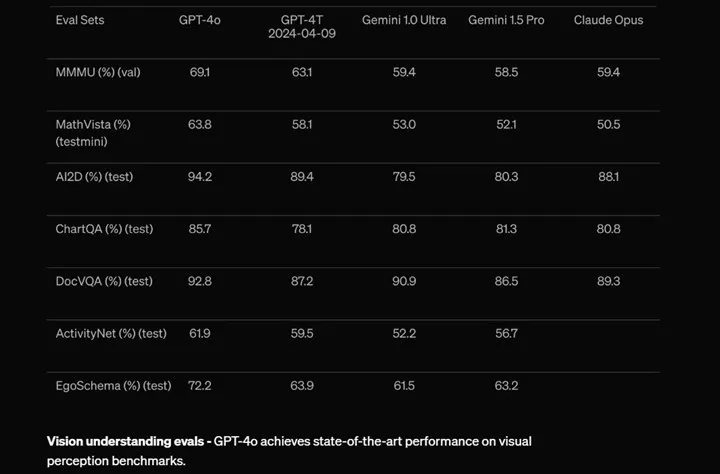

OpenAI says GPT-4o is more multilingual, with better performance across 50 languages. The company also emphasizes that, in OpenAI’s API, GPT-4o is twice as fast as GPT-4 Turbo, costs half as much, and has higher rate limits.

With GPT-4o, real-time translation is becoming a reality. The example above shows live translation between English and Spanish in both directions.
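For developers, GPT-4o is available through the OpenAI API mentioned earlier. The sketch below assumes the official `openai` Python package (version 1 or later) and its Chat Completions endpoint. It builds a request that asks `gpt-4o` to translate a photographed menu, in the spirit of the menu example above. The helper names `build_menu_translation_request` and `send_request` are hypothetical and chosen for illustration, as is the image URL.

```python
import json


def build_menu_translation_request(image_url: str, target_language: str = "English") -> dict:
    """Build a Chat Completions request body asking gpt-4o to translate a menu photo.

    Hypothetical helper; the message mixes a text part and an image_url part,
    which is how the Chat Completions format accepts image inputs.
    """
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": f"Translate this menu into {target_language}."},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


def send_request(request: dict) -> str:
    """Hypothetical helper that sends the request with the official `openai` package.

    Requires `pip install openai` and an OPENAI_API_KEY environment variable.
    The import is done lazily so that building requests works without the package.
    """
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**request)
    return response.choices[0].message.content


if __name__ == "__main__":
    # Hypothetical image URL, for illustration only; nothing is sent here.
    req = build_menu_translation_request("https://example.com/menu.jpg", "Spanish")
    print(json.dumps(req, indent=2))
```

Building the request separately from sending it keeps the example runnable without an API key. Calling `send_request(req)` is the only step that needs network access and credentials.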

In another example, GPT-4o handles lullabies and whispering. A user asks it to sing a lullaby about a potato and then asks it to whisper the story. When GPT-4o whispers too softly, the user asks it to speak up. Throughout these interactions, GPT-4o’s replies come across with cheerful, smiling expressions.

Surprisingly, GPT-4o can also be super sarcastic.

GPT-4o can even generate multiple views of a single image and turn them into 3D objects. It can also create visual narratives, and it can do so iteratively. In the visual above, a robot writing in its diary is shown from a first-person view across three steps, each building on the previous entries.

GPT-4o’s Availability

OpenAI sees GPT-4o as a step towards pushing the boundaries of deep learning in terms of practical usability, and the